Install Dotnet Spark on Ubuntu¶

Warning

Please see "Running Dotnet Spark applications on Ubuntu Container" to run a Dotnet Spark application on Docker.

Install Dotnet core¶

sudo apt-get update; \
sudo apt-get install -y apt-transport-https && \
sudo apt-get update && \
sudo apt-get install -y aspnetcore-runtime-2.1

sudo apt-get update; \
sudo apt-get install -y apt-transport-https && \
sudo apt-get update && \
sudo apt-get install -y dotnet-sdk-3.1
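Before moving on, it can help to confirm that the `dotnet` CLI actually ended up on the PATH. The following is a minimal sketch; the helper name `require_tool` is hypothetical and is not part of any .NET tooling.

```shell
# Hypothetical helper: report whether a command is available on the PATH.
# Prints "found: NAME" on success; prints to stderr and returns 1 otherwise.
require_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1" >&2
    return 1
  fi
}
```

For example, `require_tool dotnet && dotnet --list-sdks` should list a 3.1.x SDK if the second install succeeded, and `dotnet --list-runtimes` should include the 2.1 runtime from the first.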

Tip

If you run into problems:

1. The repository 'http://dl.google.com/linux/chrome/deb stable InRelease' is no longer signed. N: Updating from such a repository can't be done securely, and is therefore disabled by default.

   Solution:

2. An error occurred during the signature verification. The repository is not updated and the previous index files will be used. GPG error: https://dl.yarnpkg.com/debian stable InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY 23E7166788B63E1E

   Solution:
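The original solution commands are not preserved here. For the `NO_PUBKEY` case only, one common approach is to import the missing key from a keyserver. The sketch below extracts the key IDs from `apt-get update` output and prints an import command for each one rather than running it, so it can be reviewed first. The function names are hypothetical, and the use of `apt-key` with `keyserver.ubuntu.com` is an assumption (`apt-key` is deprecated on newer Ubuntu releases).

```shell
# Hypothetical helper: read apt-get output on stdin and print each key ID
# that follows a NO_PUBKEY marker, one per line, without duplicates.
missing_apt_keys() {
  grep -o 'NO_PUBKEY [0-9A-Fa-f]*' | cut -d' ' -f2 | sort -u
}

# Print (do not run) an import command for every missing key.
# Assumption: apt-key and keyserver.ubuntu.com are usable on this system.
suggest_key_imports() {
  missing_apt_keys | while read -r key; do
    echo "sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys $key"
  done
}
```

A possible use is `sudo apt-get update 2>&1 | suggest_key_imports`, followed by running the printed commands and then `sudo apt-get update` again. This does not address the unsigned-repository message in item 1.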

Install OpenJDK¶
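No commands survive for this step. As a rough sketch, assuming Java 8 (the version Spark 2.4.x is normally run on) and the Ubuntu package `openjdk-8-jdk`, the install and a quick version check could look like the following. Both function names are hypothetical.

```shell
# Assumption: Java 8 from the Ubuntu package openjdk-8-jdk is what is wanted.
# Defined as a function so that nothing is installed until it is called.
install_openjdk8() {
  sudo apt-get update && sudo apt-get install -y openjdk-8-jdk
}

# Print the major Java version parsed from a `java -version` banner,
# e.g. 'openjdk version "1.8.0_252"' gives 8.
java_major_version() {
  ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$ver" in
    1.*) printf '%s\n' "$ver" | cut -d. -f2 ;;  # legacy scheme: 1.8.0 is Java 8
    *)   printf '%s\n' "$ver" | cut -d. -f1 ;;  # modern scheme: 11.0.7 is Java 11
  esac
}
```

After calling `install_openjdk8`, running `java_major_version "$(java -version 2>&1)"` should print `8` if that JDK is the default.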

Install Apache Maven 3.6.3+¶

mkdir -p ~/bin/maven
cd ~/bin/maven
wget https://archive.apache.org/dist/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz
tar -xvzf apache-maven-3.6.3-bin.tar.gz
ln -s apache-maven-3.6.3 current

export M2_HOME=~/bin/maven/current
export PATH=${M2_HOME}/bin:${PATH}

source ~/.bashrc
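An `export` typed at the prompt only lasts for the current shell. The `source ~/.bashrc` step suggests the exports are meant to live in `~/.bashrc`. A minimal sketch for adding them without creating duplicates on repeated runs follows; the helper name `append_once` is hypothetical.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present, so re-running the setup stays idempotent.
append_once() {
  line=$1
  file=$2
  touch "$file"
  # -F: fixed string, -x: match the whole line, -q: no output
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For example, `append_once 'export M2_HOME=~/bin/maven/current' ~/.bashrc` followed by `append_once 'export PATH=${M2_HOME}/bin:${PATH}' ~/.bashrc`, then `source ~/.bashrc`. The single quotes keep the variables unexpanded until the file is sourced.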

Install Apache Spark 2.4.1¶

mkdir -p ~/bin/spark-2.4.1-bin-hadoop2.7
wget https://archive.apache.org/dist/spark/spark-2.4.1/spark-2.4.1-bin-hadoop2.7.tgz
tar -xvzf spark-2.4.1-bin-hadoop2.7.tgz --directory ~/bin

export SPARK_HOME=~/bin/spark-2.4.1-bin-hadoop2.7
export PATH="$SPARK_HOME/bin:$PATH"

source ~/.bashrc

Install Microsoft.Spark.Worker¶

mkdir -p ~/bin/Microsoft.Spark.Worker
wget https://github.com/dotnet/spark/releases/download/v0.12.1/Microsoft.Spark.Worker.netcoreapp3.1.linux-x64-0.12.1.tar.gz
tar -xvzf Microsoft.Spark.Worker.netcoreapp3.1.linux-x64-0.12.1.tar.gz --directory ~/bin/Microsoft.Spark.Worker

export DOTNET_WORKER_DIR=~/bin/Microsoft.Spark.Worker

source ~/.bashrc
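With Maven, Spark, and the worker in place, a quick sanity check is to confirm that each environment variable set so far points at a real directory. This is only a sketch; `check_dir_var` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if the named variable is set and
# its value is an existing directory.
check_dir_var() {
  name=$1
  # Indirect lookup of the variable whose name is in $name.
  eval "value=\${$name:-}"
  if [ -n "$value" ] && [ -d "$value" ]; then
    echo "ok: $name=$value"
  else
    echo "not ready: $name" >&2
    return 1
  fi
}
```

For example: `for v in M2_HOME SPARK_HOME DOTNET_WORKER_DIR; do check_dir_var "$v"; done`. Any `not ready` line points at a variable that is unset or a directory that was not created where expected.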

Clone sample application¶

Building Spark .NET Scala Extensions Layer¶

When you submit a .NET application, Spark .NET has the necessary logic written in Scala that tells Apache Spark how to handle your requests (e.g., a request to create a new Spark Session, or a request to transfer data from the .NET side to the JVM side). This logic can be found in the Spark .NET Scala Source Code.

Let us now build the Spark .NET Scala extension layer. This is easy to do:

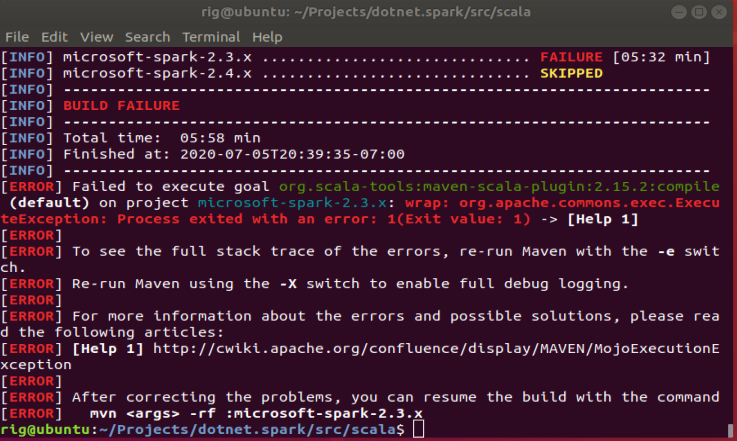
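The commands from this step are not reproduced in text. In the upstream dotnet/spark repository, the Scala layer lives under `src/scala` and is built with `mvn clean package`. A minimal sketch, assuming the repository has already been cloned somewhere on disk, is shown below. The function name `build_scala_layer` and its `repo_dir` argument are hypothetical.

```shell
# Hypothetical helper: build the Scala extension layer of a dotnet/spark
# checkout. $1 is the path to the cloned repository (an assumption; the
# clone location is not given in this guide).
build_scala_layer() {
  repo_dir=$1
  if [ ! -d "$repo_dir/src/scala" ]; then
    echo "no src/scala under: $repo_dir" >&2
    return 1
  fi
  # Run in a subshell so the caller's working directory is left unchanged.
  (cd "$repo_dir/src/scala" && mvn clean package)
}
```

Usage would be along the lines of `build_scala_layer /path/to/your/clone`, with Maven on the PATH from the earlier step.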

Ran into problem:

You should see JARs created for the supported Spark versions:

- microsoft-spark-2.3.x/target/microsoft-spark-2.3.x-

Building .NET Sample Applications using .NET Core CLI¶

- Build the Worker

- Build the Samples

Warning

Let's move on to the Docker container instead.