Running Dotnet Spark applications on Ubuntu Container

Let's create the first console application called HelloSpark

- Create a console application

- Add the Microsoft.Spark NuGet package
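The two steps above can be run from a terminal. This is a minimal sketch, assuming the .NET Core SDK is installed and that the commands are run from the folder that should contain the project (for example `$HOME/Projects`, inferred from the volume path used in the `docker run` command). The guard is an addition for safety: it skips both steps when `dotnet` is not on the PATH.

```shell
# Assumes the .NET Core SDK is installed; skips the steps when dotnet is missing.
if command -v dotnet >/dev/null 2>&1; then
  # Scaffold a console project in a new HelloSpark directory.
  dotnet new console -o HelloSpark
  # Add the Microsoft.Spark package reference to the generated project file.
  dotnet add HelloSpark/HelloSpark.csproj package Microsoft.Spark
else
  echo "dotnet SDK not found on PATH; install it before continuing" >&2
fi
```

`dotnet add ... package` needs network access to reach the NuGet feed.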

- Open the project in VS Code and add the application code to Program.cs
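A minimal sketch of what Program.cs could contain, assuming the goal is simply to read the JSON file into a DataFrame and print it. The program body is illustrative rather than the author's exact code, and the file name `person.json` is an assumption. The heredoc writes the file into the `HelloSpark` folder from its parent directory.

```shell
# Create the project folder if it does not exist yet.
mkdir -p HelloSpark

# Write an illustrative Program.cs; the quoted 'EOF' keeps the C# text verbatim.
cat > HelloSpark/Program.cs <<'EOF'
using Microsoft.Spark.Sql;

namespace HelloSpark
{
    class Program
    {
        static void Main(string[] args)
        {
            // Create (or reuse) a Spark session for this application.
            SparkSession spark = SparkSession
                .Builder()
                .AppName("HelloSpark")
                .GetOrCreate();

            // Load the JSON file (one object per line) into a DataFrame.
            DataFrame df = spark.Read().Json("person.json");

            // Print the rows to the console.
            df.Show();

            spark.Stop();
        }
    }
}
EOF
```

The relative path `person.json` is resolved against the working directory of the running application, which is why the file has to be copied next to the built DLL.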

- Add a person.json file
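By default, Spark's JSON reader expects one JSON object per line, so a sample data file could look like the one written below. The names and ages are made-up sample data, and the file name `person.json` is assumed from the later step that copies a JSON file to the output directory.

```shell
# Create the project folder if it does not exist yet.
mkdir -p HelloSpark

# Write three sample records, one JSON object per line (JSON Lines).
cat > HelloSpark/person.json <<'EOF'
{"name":"Michael"}
{"name":"Andy","age":30}
{"name":"Justin","age":19}
EOF
```

Records do not need identical fields; when Spark infers the schema, a missing `age` is read as null.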

- Change the .csproj file to copy the JSON file to the output directory (for example, with a `CopyToOutputDirectory` setting on that file)

- Build the application with `dotnet build`

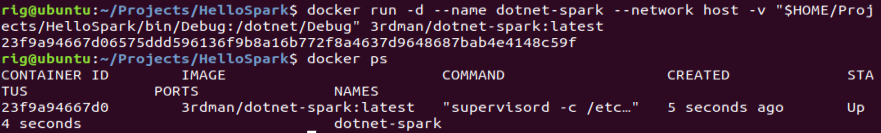

Docker containe should be running at this point 7. Run the application in the docker container with network mapped to host

```bash

docker run -d --name dotnet-spark --network host -v "$HOME/Projects/HelloSpark/bin/Debug:/dotnet/Debug" 3rdman/dotnet-spark:latest

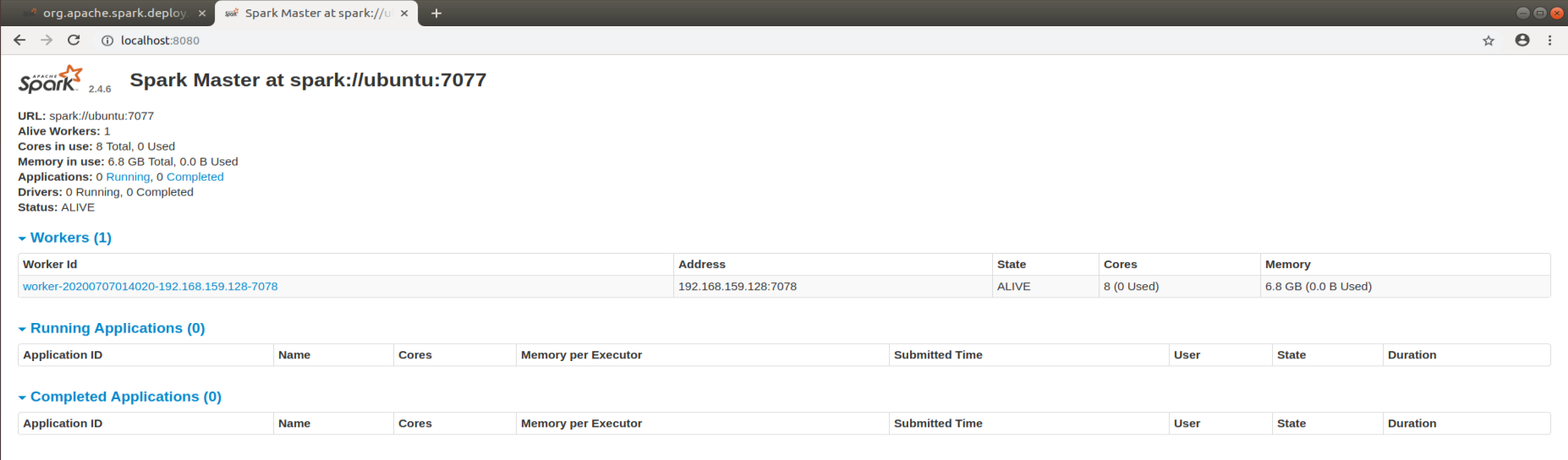

Other option would be to run with port mapping

Bash

where port 8080 (spark-master), 8081 (spark-slave) 5567 (backend-debugging) and 4040 (Spark UI) docker run -d --name dotnet-spark -p 8080:8080 -p 8081:8081 -p 5567:5567 -p 4040:4040 -v "$HOME/Projects/HelloSpark/bin/Debug:/dotnet/Debug" 3rdman/dotnet-spark:latest

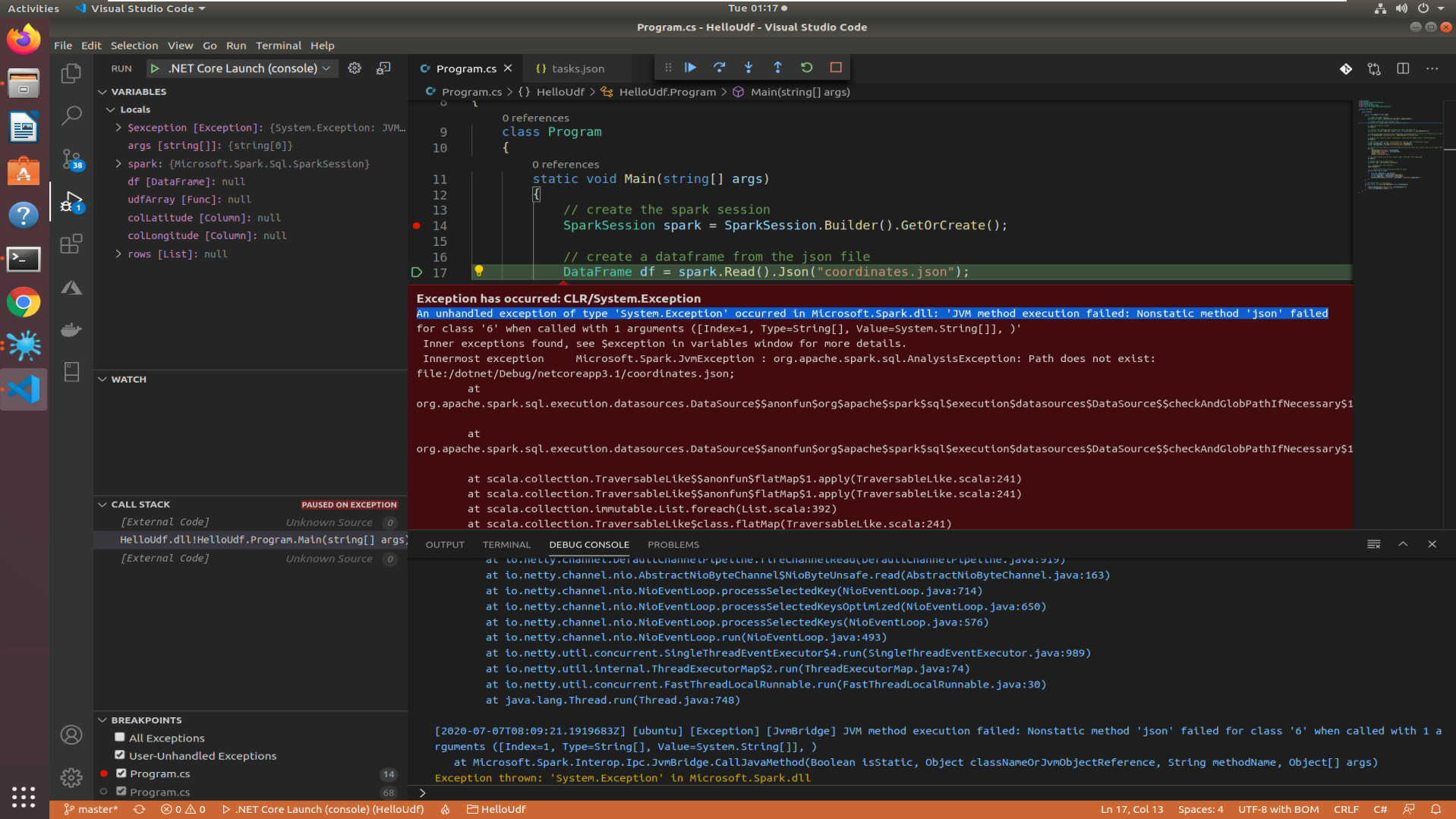

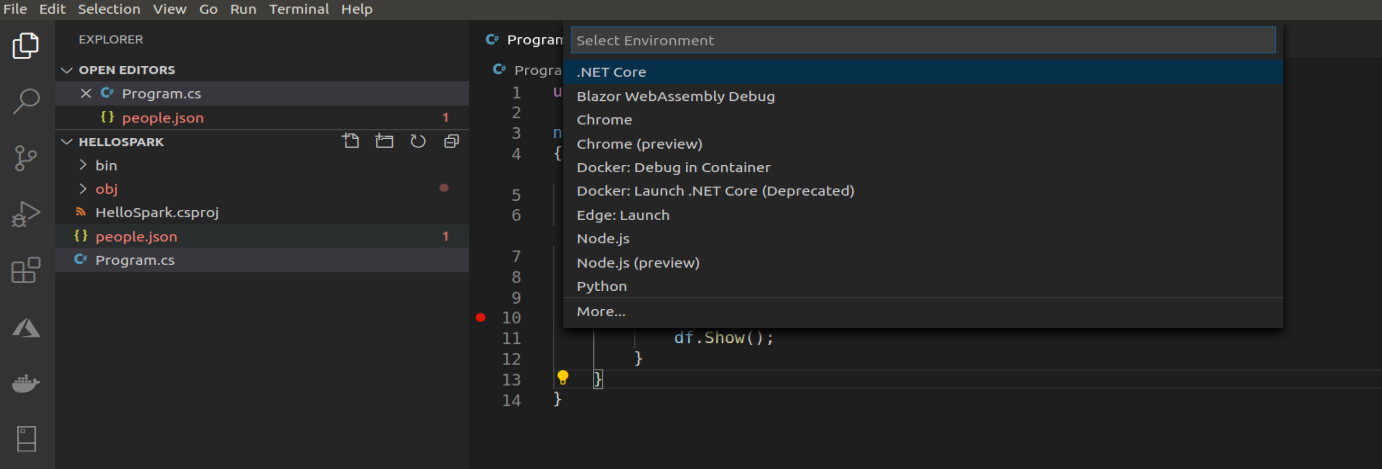

- Configure VS Code to debug the application. When we hit debug, VS Code might say the build task is not available and ask to create one; choose .NET Core.

launch.json

```json
{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": ".NET Core Launch (console)",
            "type": "coreclr",
            "request": "launch",
            "preLaunchTask": "build",
            // If you have changed target frameworks, make sure to update the program path.
            "program": "${workspaceFolder}/bin/Debug/netcoreapp3.1/HelloSpark.dll",
            "args": [],
            "cwd": "${workspaceFolder}",
            // For more information about the 'console' field, see https://aka.ms/VSCode-CS-LaunchJson-Console
            "console": "internalConsole",
            "stopAtEntry": false,
            "logging": {
                "moduleLoad": false
            }
        },
        {
            "name": ".NET Core Attach",
            "type": "coreclr",
            "request": "attach",
            "processId": "${command:pickProcess}"
        }
    ]
}
```

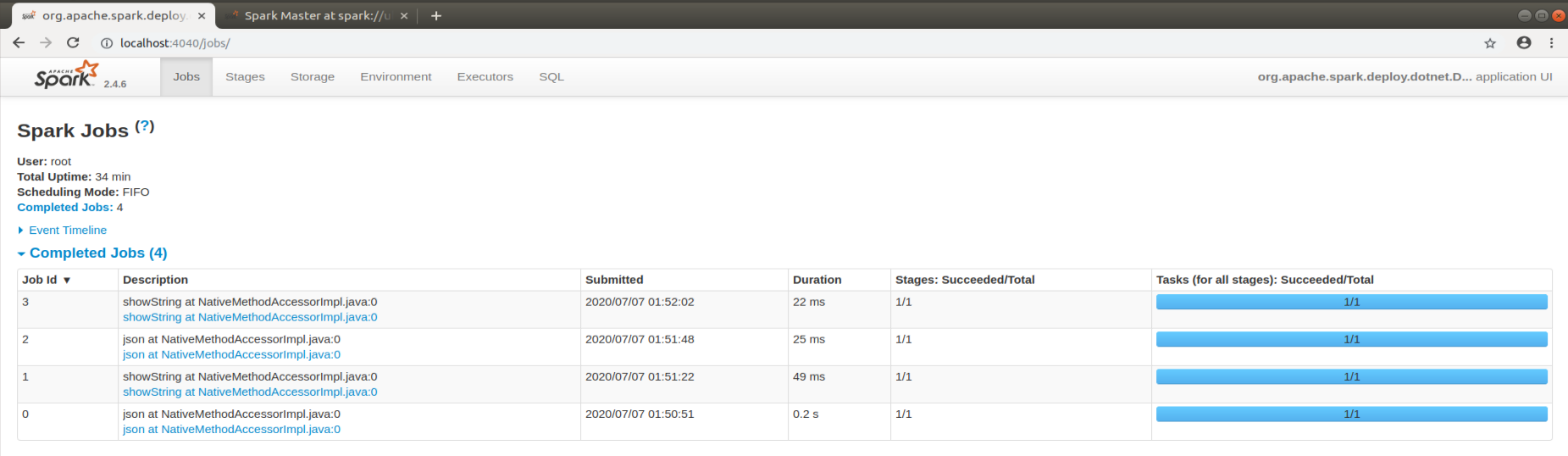

Awesome, we can now run and debug the application.

Warning

While debugging in VS Code on Ubuntu, use only one instance; otherwise the debugger won't work.

Warning

TODO: Investigate why the execution looks for the JSON file at '/dotnet/Debug/netcoreapp3.1/' for other projects.

Project URL

Commands to execute after cloning: